UPDATED

You've heard the rumbles about the amount of testing in schools, teaching to the test, test cheating scandals, testing boycotts by parents, and the use of test results to evaluate teachers. Now there is a move to reduce the volume and increase the quality of assessments, at the same time that districts are being asked to create more pre- and post-tests for every grade and subject for "student growth" measures used in educator evaluation. All of this is layered over state accountability testing requirements and the federal ESEA requirement to test ELA and math (grades 3-8 and once in high school) and science (once each in elementary, middle, and high school).

On October 15, the Council of Chief State School Officers (CCSSO) and the Council of the Great City Schools (CGCS) issued a joint statement of commitments on high-quality assessments with statements of support from education leaders of states and large-city schools. While the organizations are not moving away from "assessments given at least once a year," they are saying that assessments should be:

- high quality

- part of a coherent system and

- meaningful

What does that mean? In 2013, CCSSO issued its definition of "high quality" for ELA and math college- and career-ready standards, as did the U.S. Department of Education in its requirements for ESEA flexibility waivers ("high-quality assessment" is required in principle 1). As to coherence:

Assessments should be administered in only the numbers and duration that will give us the information that is needed and nothing more.

And "meaningful" relates to improving instruction and informing parents: "timely, transparent, disaggregated, and easily accessible."

Both organizations are inventorying the assessments given. CGCS preliminarily reported that students take 113 assessments over grades K-12, with the most in 11th grade. The ultimate plan is to

Streamline or eliminate assessments that are found to be of low quality, redundant, or inappropriately used.

In the accompanying webinar, there was explicit reference to eliminating multiple tests with overlapping purposes, as well as assessments that are no longer aligned to mastery of the content being measured.

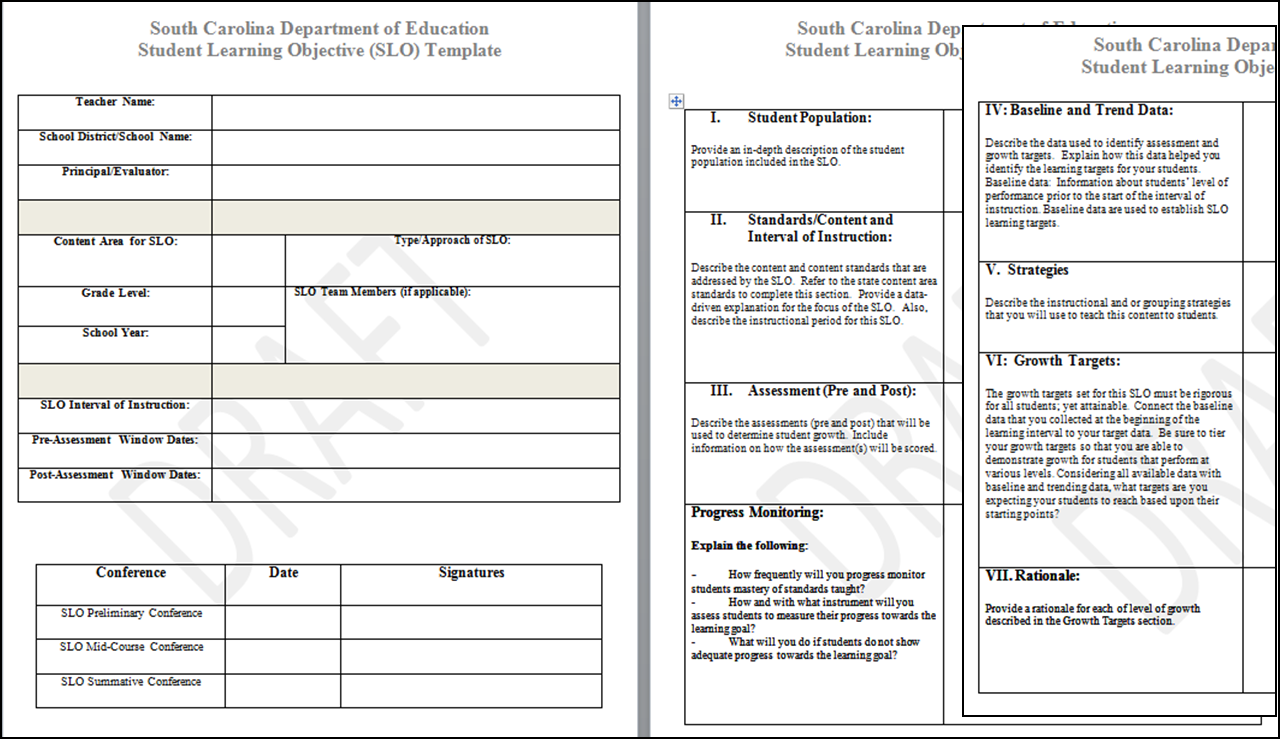

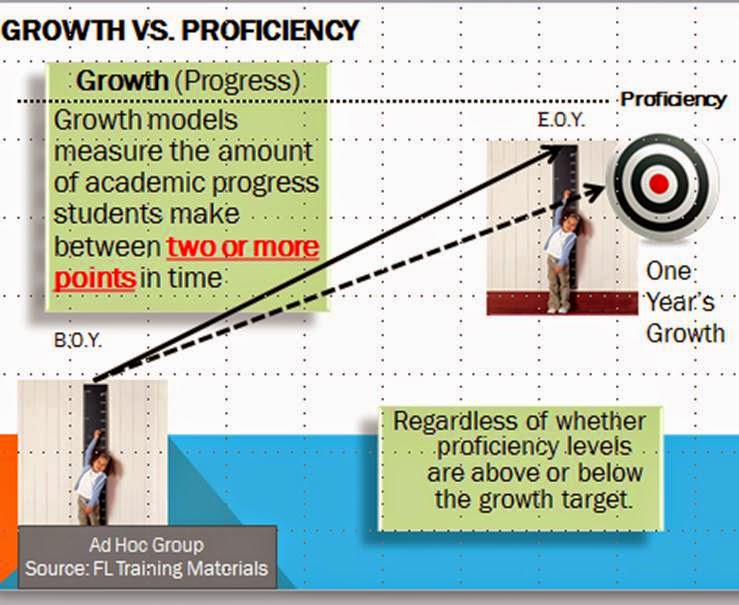

Meanwhile, districts are scrambling for student growth measures to include in the student learning objectives (SLOs) used to evaluate teachers in the ironically named "non-tested" grades and subjects. And the "next generation" innovators are moving away from a single summative test toward learning progressions with mastery assessments. EdWeek reports that New Hampshire is proposing a pilot in which the summative SMARTER Balanced Assessments would be used in some grades/subjects but the state-developed PACE performance assessments would be used in others. Linda Darling-Hammond, Gene Wilhoit, and Linda Pittenger recently published a call for a new paradigm for accountability and assessment (here are links to the long version and the brief).

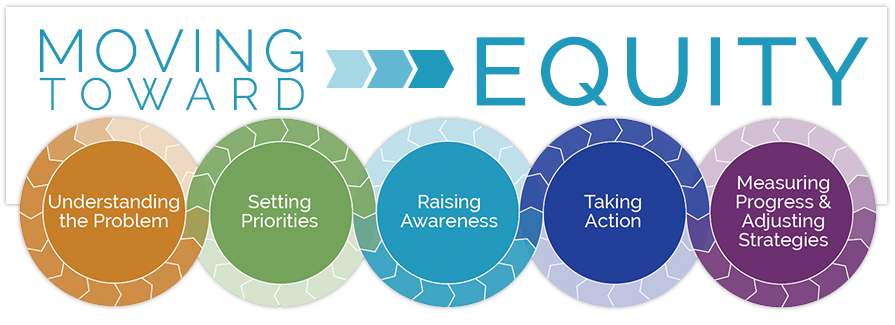

So here we are with the sometimes conflicting purposes of wanting better student measures, better information on teacher effectiveness, less "seat time," more "personalized" learning, and more meaningful accountability systems.

Meanwhile, in SC, we've come up with an innovative way to avoid having teachers "teaching to the test": don't select the test until ?November? The September intent to award a statewide contract to ACT was protested by DRC, and the hearing started October 23. No one is predicting when a decision will be made on which assessments students will take in Spring 2015.

UPDATED 10/27: For an interesting take on what might be behind all of this, check out the EdWeek Politics K-12 team's post.

And apparently the ACT-DRC procurement protest hearing ran late on Friday (10/24); SCDE posted a "Supplemental Statement" objecting to a few things.

The Consortium of Large Countywide and Suburban Districts (which includes Greenville, SC) has also come out with a letter to Secretary Duncan in support of fewer summative assessments of higher quality.

This afternoon (Thursday, November 13) the U.S. Department of Education posted the guidance on applications for renewal of state flexibility under the Elementary and Secondary Education Act and No Child Left Behind. States have until March 31, 2015, to file their applications for renewal. Those Window 1 and 2 states (which include SC) that want expedited review must submit by January 30, 2015.